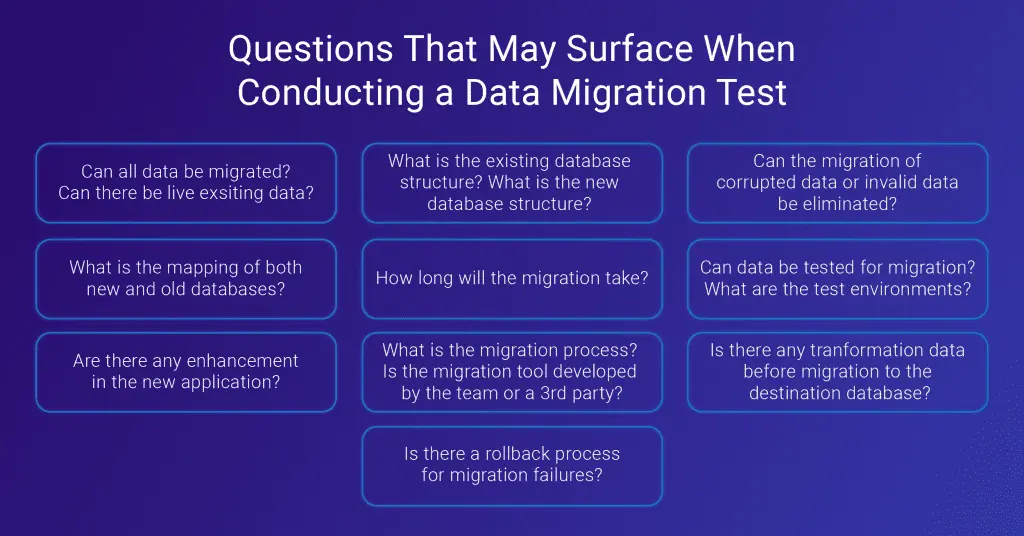

In software testing, data migration testing compares migrated data with the original data to discover any discrepancies introduced when moving data from legacy databases to a new destination. As organizations move their operations to the cloud, one of the foundational pillars of digital transformation, data migration testing protects the integrity of mission-critical systems and keeps business running as usual for business users.

Data can be migrated automatically through a migration tool or by manually extracting data from the source database and inserting the data into the destination database.

Data migration testing encompasses data-level validation testing and application-level validation testing.

Data-level validation testing

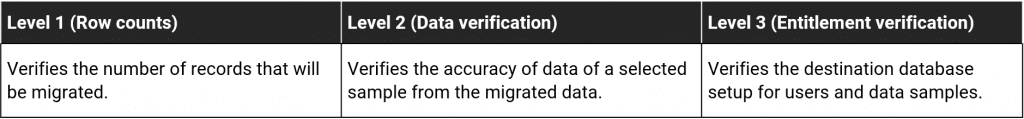

Data-level validation testing verifies that data has been migrated from multiple databases to a common database without any discrepancies. During data-level validation testing, data is verified at three levels.

Application-level validation testing

In application-level validation testing, a software tester verifies the functionality of a sample application that was migrated from a legacy system to the new system. Application-level validation testing ensures that the migrated application runs smoothly with the new database, using the following validations:

- After migration, log in to the new application and verify a sample data set.

- After migration, log in to legacy systems and verify the locked/unlocked status of accounts.

- Verify that customer support can still access all legacy systems while users are blocked during the migration process.

- Verify whether new users are prohibited from creating a new account in a legacy system after launching the new application.

- Verify immediate reinstatement of user access to the legacy system if migration to the new system fails.

- Verify the termination of access to legacy systems at migration.

- Validate system login credentials for the new application.

Software testing: Test Approach for data migration testing

Data validation test design

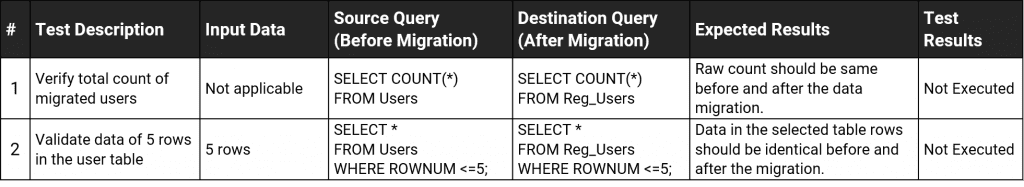

When you test a database migration, it is important to create a set of SQL queries that validate the data before migration (in the source database) and after migration (in the destination database). The validation queries can be arranged in a hierarchy, and together they should cover the designed scope.

For example, to test whether all users have been migrated, check how many users are in the source database and how many are in the destination database. Comparing the row counts of the two databases will confirm this.
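
The count check described above can be sketched in a few lines. This is a minimal illustration that assumes both databases are reachable from Python; it uses in-memory SQLite databases as stand-ins, and the `users` table and the helper names `row_counts` and `count_mismatches` are hypothetical.

```python
import sqlite3


def row_counts(conn, tables):
    """Return {table_name: row_count} for the given tables."""
    counts = {}
    for table in tables:
        # Table names cannot be bound as parameters, so pass trusted names only.
        query = f'SELECT COUNT(*) FROM "{table}"'
        counts[table] = conn.execute(query).fetchone()[0]
    return counts


def count_mismatches(source, destination, tables):
    """Return {table: (source_count, destination_count)} where counts differ."""
    src = row_counts(source, tables)
    dst = row_counts(destination, tables)
    return {t: (src[t], dst[t]) for t in tables if src[t] != dst[t]}


# Hypothetical demo data: three users in the source, only two migrated.
source = sqlite3.connect(":memory:")
destination = sqlite3.connect(":memory:")
for conn, names in ((source, ["ann", "bob", "cy"]), (destination, ["ann", "bob"])):
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [(n,) for n in names])

print(count_mismatches(source, destination, ["users"]))  # {'users': (3, 2)}
```

An empty result means every listed table has the same number of rows on both sides. Matching counts do not prove the content is identical, which is why the sample comparison that follows is still needed.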

Take a sample data set from the source database and compare it with the corresponding data in the destination database. Testing tips:

- Always include the most important data sets and rows that have values in all columns

- Verify the data types after the migration

- Check the different time formats/zones, currencies, etc.

- Check for data with special characters

- Find data that should not be migrated

- Check for duplicated data after migration
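
Two of the tips above, comparing a sample of rows and checking for duplicates, could be automated along these lines. This is only a sketch: SQLite stands in for both databases, and the `customers` table, its columns, and the helper names are assumptions made for illustration.

```python
import sqlite3


def fetch_by_key(conn, table, key, columns):
    """Return {key_value: row_tuple} for the selected columns of a table."""
    cols = ", ".join(f'"{c}"' for c in columns)
    rows = conn.execute(f'SELECT "{key}", {cols} FROM "{table}"')
    return {row[0]: row[1:] for row in rows}


def compare_sample(source, destination, table, key, columns, sample_keys):
    """Return sampled keys whose rows are missing or differ after migration."""
    src = fetch_by_key(source, table, key, columns)
    dst = fetch_by_key(destination, table, key, columns)
    return [k for k in sample_keys if src.get(k) != dst.get(k)]


def find_duplicates(conn, table, columns):
    """Return value combinations that occur more than once in the columns."""
    cols = ", ".join(f'"{c}"' for c in columns)
    query = f'SELECT {cols} FROM "{table}" GROUP BY {cols} HAVING COUNT(*) > 1'
    return conn.execute(query).fetchall()


# Hypothetical demo data: a special character is mangled in row 1,
# and row 3 duplicates row 2 in the destination.
source = sqlite3.connect(":memory:")
destination = sqlite3.connect(":memory:")
for conn in (source, destination):
    conn.execute("CREATE TABLE customers (id INTEGER, name TEXT, email TEXT)")
source.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, "Zoë O'Neil", "zoe@example.com"), (2, "Li", "li@example.com")],
)
destination.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [
        (1, "Zo? O'Neil", "zoe@example.com"),
        (2, "Li", "li@example.com"),
        (3, "Li", "li@example.com"),
    ],
)

print(compare_sample(source, destination, "customers", "id", ["name", "email"], [1, 2]))
print(find_duplicates(destination, "customers", ["name", "email"]))
```

Because rows are compared as Python tuples, a value whose type changed during migration (for example the number `10` becoming the text `"10"`) also shows up as a difference.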

A sample test case can be created as shown below:

Test environments

Test environments should consist of a copy of the source database and a blank, isolated destination database. A tester can migrate data using a migration tool, which facilitates the migration both table by table and using a set of reference tables. The tool should be able to handle a large data load, since the data can include data from historical databases.

Data validation test runs

Depending on the test design, the database migration process must be completed before the validation tests are run.

Reporting bugs

If the migration test fails, it is important to report the bug with the following information:

- Number of failed rows and columns

- Name of the failed object

- Database error logs

- The query used to validate the data

- User account information used to run the validation

- Date and time of the test

- Semantic errors
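
One way to make sure every bug report carries the items listed above is to capture them in a small record type. The sketch below is an assumption about how such a record might look; the class name, field names, and sample values are all hypothetical.

```python
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class MigrationBugReport:
    """Illustrative container for the details of a failed migration check."""

    failed_object: str        # name of the failed table, view, etc.
    failed_rows: int          # number of failed rows
    failed_columns: list      # names of the failed columns
    validation_query: str     # the query used to validate the data
    run_as_user: str          # account used to run the validation
    error_log_excerpt: str = ""  # relevant lines from the database error log
    semantic_errors: list = field(default_factory=list)
    # Date and time of the test, recorded in UTC when the report is created.
    tested_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


# Hypothetical example values.
report = MigrationBugReport(
    failed_object="customers",
    failed_rows=12,
    failed_columns=["email"],
    validation_query="SELECT COUNT(*) FROM customers WHERE email IS NULL",
    run_as_user="qa_readonly",
    semantic_errors=["email is empty for active customers"],
)
print(asdict(report))
```

`asdict` turns the record into a plain dictionary, which is convenient for pasting into a ticket or serializing to JSON.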

Tips for creating an effective data migration testing approach

- Users should be able to access both existing data and post-migration data without any issues.

- Database performance should be the same or better after the migration.

- Note the duration of the migration. A migration can take a long time, which increases the risk of application downtime.

- Have a copy of the source databases to conduct re-tests at any time on a new database. It will also help reproduce the bugs.

- Corrupted data should not be migrated to the destination database, and necessary actions should be taken to resolve the corrupted data.

- Get stakeholders involved in the migration plan, as their permission to access different data sources could be mandatory.

- Make sure there are no inconsistencies in currency, date and time, time zone fields, and decimal points of currencies.
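
The last tip, checking currency decimals and date/time values, is easy to get wrong with plain string or float comparisons. Below is a minimal sketch of two comparison helpers, assuming amounts arrive as strings or numbers and timestamps as ISO 8601 strings with a UTC offset; the function names and sample values are hypothetical.

```python
from datetime import datetime
from decimal import Decimal


def same_amount(source_value, destination_value):
    """Compare two monetary values exactly, ignoring trailing zeros."""
    # Going through str() avoids binary float artifacts in the comparison.
    return Decimal(str(source_value)) == Decimal(str(destination_value))


def same_instant(source_iso, destination_iso):
    """Compare two ISO 8601 timestamps as points in time, across time zones."""
    a = datetime.fromisoformat(source_iso)
    b = datetime.fromisoformat(destination_iso)
    if a.tzinfo is None or b.tzinfo is None:
        raise ValueError("timestamps must carry a UTC offset to be comparable")
    return a == b


# "10.50" and 10.5 are the same amount; "10.50" and "10.49" are not.
print(same_amount("10.50", 10.5))     # True
print(same_amount("10.50", "10.49"))  # False

# 10:00 at +05:30 is the same instant as 04:30 UTC.
print(same_instant("2023-01-15T10:00:00+05:30", "2023-01-15T04:30:00+00:00"))  # True
```

Timestamps without an offset are rejected rather than guessed at, since the time zone they were stored in is exactly what this check is meant to verify.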

