How would you react to a 10-second application/website delay every time you click on a link/button or perform an action in a user interface?

Slow loading times will inevitably drive frustrated users away from a website and may even deter return visits. In recent years, the acceptable page load time for most users has been 5 seconds or less.

However, a recent study conducted by Forrester Consulting and recommended by Google notes that the average response time is two seconds. Furthermore, 40% of customers will abandon an e-commerce website if a page takes more than 3 seconds to load. Quick response times are more important than ever for ensuring a positive user experience.

But how important is response time for ERPs? An ERP is the heart of a business, and its performance is crucial to ensuring business continuity. Every minute of downtime or delayed response in your ERP system is a hit to the bottom line, so it is imperative that the ERP operates at peak performance.

The Importance of Performance Testing in ERP projects

What is performance testing?

Performance testing is a type of non-functional testing which checks how the system performs in terms of responsiveness and stability when tested under different workload conditions.

It uses a specialized set of software tools to simulate the user load on a system.

Performance testing goals include evaluating application output, processing speed, data transfer velocity, network bandwidth usage, maximum concurrent users, memory utilization, workload efficiency, and command response times.

When should you consider performance testing for ERP?

Organisations should opt for performance testing during:

- New ERP implementation

- ERP Rollout

- Custom interface/SDK development

- Version upgrade

- System Integrations

Types of Performance Tests for ERP

- Load Test – The system is tested under a specific expected user load. This ensures that the system can handle normal working conditions without day-to-day performance issues.

- Stress Test – The system's performance is tested outside the parameters of normal working conditions by simulating a number of users that greatly exceeds expectations. The goal of stress testing is to measure the stability of the software and identify the point at which it fails.

- Endurance Test – Also known as soak testing, this evaluates how the system performs with a normal workload over an extended amount of time.

- Spike Test – This evaluates how the system behaves under extreme variations in traffic by simulating substantial increases in user load within a short amount of time.
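
As a rough illustration of how the four test types differ, each one can be expressed as a user-count profile over time. The sketch below is not taken from any particular tool: the function name, the baseline of 100 users, the step size and the spike window are all illustrative assumptions.

```python
def load_profile(kind: str, minute: int, normal_users: int = 100) -> int:
    """Return the simulated user count at a given minute for a test type.

    All figures are illustrative assumptions, not recommendations.
    """
    if kind == "load":
        # Constant, expected day-to-day load.
        return normal_users
    if kind == "stress":
        # Step the load up by 50% of normal every 10 minutes
        # until the system shows where it breaks.
        return normal_users + (minute // 10) * (normal_users // 2)
    if kind == "endurance":
        # Same level as a load test; the difference is that the
        # test runs for an extended period (hours, not minutes).
        return normal_users
    if kind == "spike":
        # Sudden 5x burst between minutes 10 and 12, then back to normal.
        return normal_users * 5 if 10 <= minute < 12 else normal_users
    raise ValueError(f"unknown test type: {kind}")


if __name__ == "__main__":
    for kind in ("load", "stress", "endurance", "spike"):
        print(kind, [load_profile(kind, m) for m in (0, 10, 20, 30)])
```

Most load testing tools let you configure a ramp or schedule along these lines; the exact settings depend on the tool selected.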

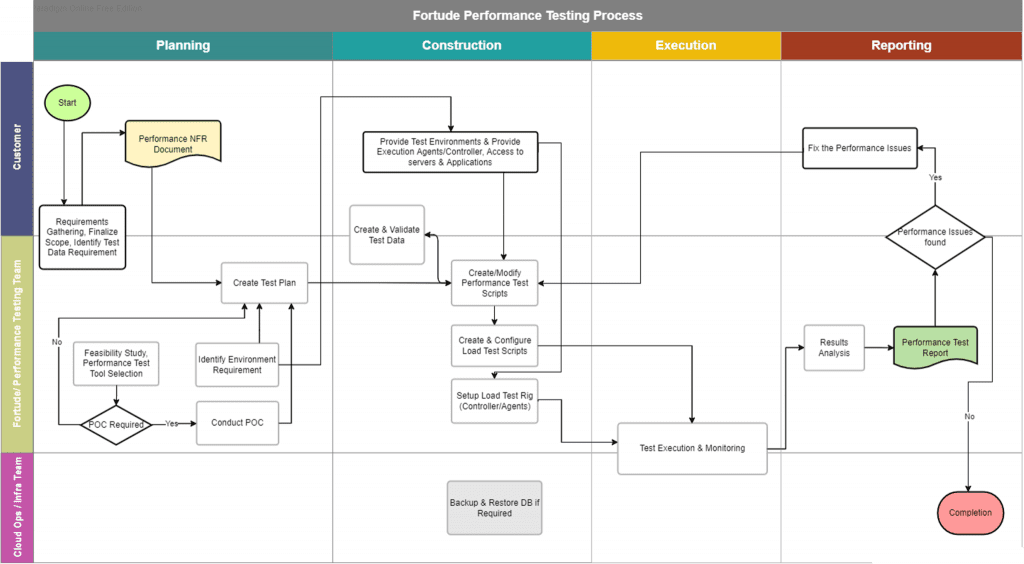

Fortude’s ERP performance testing process

At Fortude, we adhere to the following process to ensure performance requirements are met:

- Gather performance test requirements

This phase is considered the most challenging in performance testing. However, gathering the correct performance test requirements is necessary to execute an accurate test. If the non-functional requirements are properly captured and documented by the business analyst, the performance tester can start from there. Additionally, testers can gather usage statistics if a monitoring tool is in place.

But in many cases, the application is new, and the data is unavailable for the performance tester. Therefore, to get the performance test requirements from a non-technical user, the performance test lead will start a discussion by asking simple yet necessary questions without using technical jargon.

For example:

What is the expected user load?

How fast do you expect a page to load?

The performance test lead will also explain the relevant performance terms and concepts to the customer, with examples, to better understand the customer’s expectations.

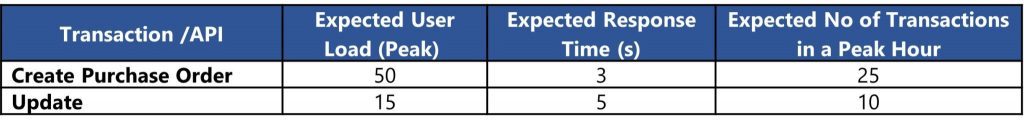

The following requirement elicitation format helps you to gather input from multiple teams. It is a simple table that allows you to elicit all requirements in the same format:

- Feasibility Study and test tool selection

The Performance Test Lead and the performance team will conduct a feasibility analysis considering the requirement, the application and the environment.

Selecting the right set of tools at this stage is necessary to complete the performance test successfully. Multiple factors, including the tool features and the customer’s budget, must be considered when finalizing the performance tools.

- Conduct a Proof of Concept if required

If a proof of concept is required, the team will plan and conduct it before proceeding any further. This ensures that last-minute bottlenecks do not arise from technical limitations.

- Create the Performance Test Plan

The performance test plan will act as the blueprint of the performance test activities and is prepared by the Performance Test Lead. This document contains the performance test objectives, scope, approach, entry & exit criteria, acceptance criteria, environment & tool requirements, deliverables & risks.

Once the customer signs off on it, the performance team will use it as the go-to document throughout the performance testing phase.

- Create and validate test data

Availability of a proper data set is a must for any type of testing to be successful. However, a performance test requires an extensive data set in the system under test. Manual creation of such a dataset is unfeasible.

There are different approaches to creating large amounts of data for testing. For example, using data generator tools, restoring a production database, and automating data creation with scripts or tools are all potential approaches. The selected method will vary depending on the project and requirement. Whichever method is used, the performance test engineer must validate the data set before executing the test.
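
A minimal sketch of the automated approach, using only the Python standard library, is shown below. The record layout (`order_id`, `customer_id`, `quantity`) and the validation rules are hypothetical; a real ERP data set would follow the schema of the system under test.

```python
import random


def generate_orders(count: int, seed: int = 42):
    """Yield synthetic order records (hypothetical schema)."""
    rng = random.Random(seed)  # fixed seed keeps the data set reproducible
    for i in range(1, count + 1):
        yield {
            "order_id": f"ORD{i:07d}",
            "customer_id": f"CUST{rng.randint(1, 500):04d}",
            "quantity": rng.randint(1, 100),
        }


def validate(rows) -> list:
    """Return a list of problems; an empty list means the set looks usable."""
    problems = []
    ids = [row["order_id"] for row in rows]
    if len(ids) != len(set(ids)):
        problems.append("duplicate order_id")
    if any(row["quantity"] <= 0 for row in rows):
        problems.append("non-positive quantity")
    return problems


if __name__ == "__main__":
    orders = list(generate_orders(10_000))
    print(len(orders), "rows generated; problems:", validate(orders))
```

Validating before the test run matters because bad data tends to show up as functional errors during execution, which distorts the response times being measured.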

- Develop performance test scripts

Using the selected performance test tool, performance engineers will record the test scenarios and configure and test them before the actual performance test. All test scenarios identified during the requirement gathering phase will be converted to performance test scripts to cover all use cases. The performance test lead will review and validate the scripts and provide feedback until all scripts are finalized.
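
Commercial and open-source tools generate these scripts through recording, but the underlying idea can be sketched in a few lines of Python. In the example below, `place_order_stub` is a hypothetical stand-in for a real ERP transaction, and the thread-based runner is an illustration of the concept, not a substitute for a proper load testing tool.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def place_order_stub() -> None:
    """Hypothetical stand-in for a real ERP transaction."""
    time.sleep(0.01)


def run_virtual_user(iterations: int) -> list:
    """Run one scripted scenario repeatedly and time each iteration."""
    timings = []
    for _ in range(iterations):
        start = time.perf_counter()
        place_order_stub()
        timings.append(time.perf_counter() - start)
    return timings


def run_load(users: int, iterations: int) -> list:
    """Run the scenario for several concurrent virtual users."""
    with ThreadPoolExecutor(max_workers=users) as pool:
        per_user = pool.map(run_virtual_user, [iterations] * users)
        # Flatten the per-user timing lists into one list of samples.
        return [t for timings in per_user for t in timings]


if __name__ == "__main__":
    samples = run_load(users=5, iterations=3)
    print(f"{len(samples)} samples, slowest {max(samples):.3f}s")
```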

- Set up and Configure Load Generation Environments

As specialized tools are being used to simulate the user load required for the performance test, a dedicated load generation environment is required. The test tool will use the load generator (or node) to emulate the end user’s behavior. The number of load generators required will vary depending on the test size. For example, three load generators were required to simulate 1000 users on Infor M3 during a particular implementation.
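
The sizing arithmetic is simple once the capacity of a single load generator is known. The sketch below assumes a capacity of 400 virtual users per generator, chosen only because it reproduces the three-generator figure above; the real capacity depends on the tool, the script complexity and the generator hardware, and should be measured.

```python
import math


def generators_needed(target_users: int, users_per_generator: int) -> int:
    """Return how many load generators are needed for a target user load."""
    if users_per_generator <= 0:
        raise ValueError("users_per_generator must be positive")
    # Round up: a partially used generator is still a whole machine.
    return math.ceil(target_users / users_per_generator)


if __name__ == "__main__":
    # 400 users per generator is an assumed capacity, not a measured one.
    print(generators_needed(1000, 400))
```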

- Test Execution & Monitoring

Once all the scripts, test data and environments are ready, the performance engineer will execute the tests. Generally, a performance test will run continuously for about one hour. During the test execution, response times and the utilization of the network, database, CPU and memory will be monitored. Specialized monitoring tools such as New Relic, AppDynamics, and Datadog can be used for this. In some instances, monitoring is done with the help of the CloudOps/Infrastructure team.

- Results Analysis

Results analysis is the most important technical component of performance testing. Analyzing the output of the test tool requires prior experience and technical expertise. A skilled performance engineer will identify patterns in the results and find any bottlenecks using the test results and graphs. By comparing the results with the non-functional requirements (NFRs), they will determine the slow-performing scenarios/APIs and recommend improvements to the system.
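
The comparison against the requirements can be sketched as follows. This example assumes the requirement is expressed as a 90th-percentile response time per scenario and uses the nearest-rank percentile method; the scenario names, sample timings and thresholds are invented for illustration.

```python
import math


def percentile(samples, pct: float) -> float:
    """Nearest-rank percentile of a list of response times."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def slow_scenarios(results: dict, nfr_seconds: dict, pct: float = 90) -> dict:
    """Return scenarios whose percentile response time exceeds the requirement."""
    slow = {}
    for name, timings in results.items():
        observed = percentile(timings, pct)
        if observed > nfr_seconds[name]:
            slow[name] = observed
    return slow


if __name__ == "__main__":
    # Invented sample data: response times in seconds per scenario.
    results = {
        "login": [0.8, 0.9, 1.0, 1.1, 1.2],
        "create_order": [2.0, 2.5, 3.0, 3.5, 6.0],
    }
    nfr = {"login": 2.0, "create_order": 3.0}
    print(slow_scenarios(results, nfr))
```

Percentiles are generally preferred over averages here because a small number of very slow responses can hide behind a healthy-looking mean.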

- Create a performance test report

The performance test report will document all the findings, errors, graphs, bottlenecks, and recommendations.

It will contain all the information the relevant stakeholders need to make the Go/No-Go decision and the developers need to make any performance improvements.

- Retest if needed.


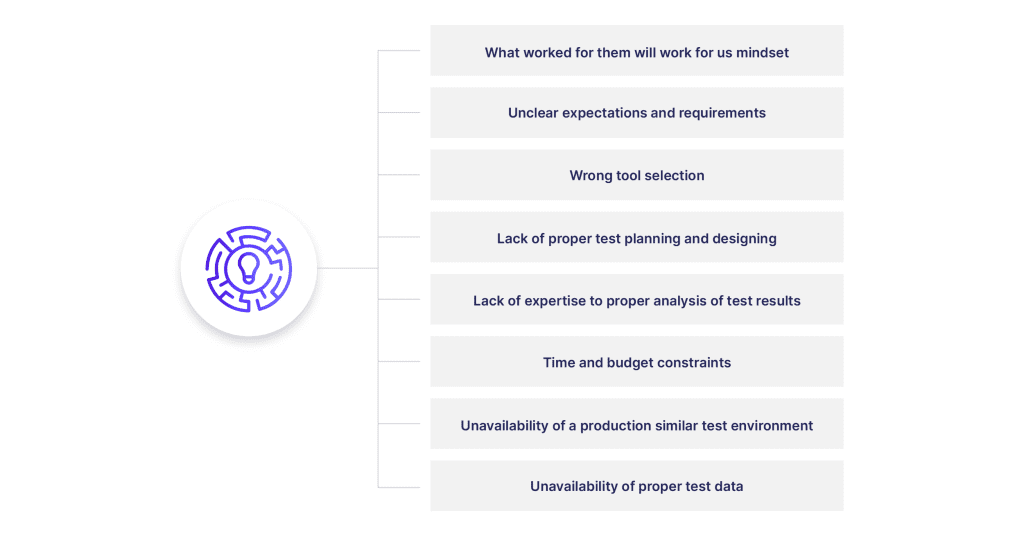

Common performance testing mistakes

Performance testing may not provide the expected outcomes if we fall prey to some common mistakes. This may result in the business experiencing performance issues which escalate to the production environment, which in turn will impact the business negatively.

Fortude has extensive expertise in successfully conducting performance tests in multiple domains, including Fashion, F&B and Healthcare. Our processes and frameworks are designed to eliminate such mistakes, ensuring accurate performance test outcomes. Speak to our experts to learn more about our suite of testing services, including functional, performance, security and mobility testing.
